dropwatch: a handy tool for tracking down packet drops in the network stack

Original article. When reposting, please credit the source: 系统技术非业余研究


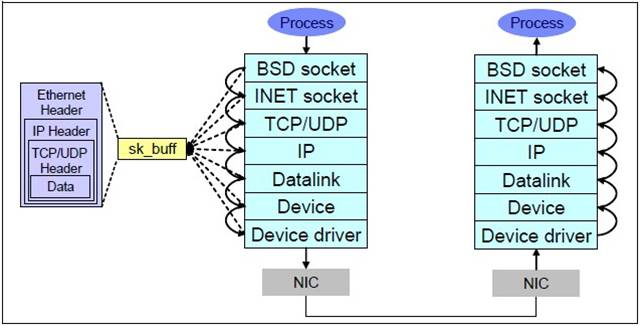

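When you run network servers you hit all sorts of network problems, timeouts for instance. For this kind of problem the tool most developers reach for is tcpdump, or the more advanced wireshark, to capture and analyze packets. These tools solve most problems, but when, say, wireshark shows that packets are being lost, the underlying cause is hard to explain. That is not the developer's fault; blame the depth of the Linux network stack. Let's take a look:

Every one of these 7 layers can drop a packet for all sorts of reasons, such as a full buffer or an invalid packet, and finding problems like that takes a special tool. So here comes the star of the show: dropwatch.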

Its official website introduces it as follows:

What is Dropwatch

Dropwatch is a project I am tinkering with to improve the visibility developers and sysadmins have into the Linux networking stack. Specifically I am aiming to improve our ability to detect and understand packets that get dropped within the stack.

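Dropwatch has a clear focus: it is for looking into packets dropped inside the protocol stack.

On RHEL-family systems installation is simple; just use yum: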

$ uname -r

2.6.32-131.21.1.tb477.el6.x86_64

$ sudo yum install dropwatch

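Running man dropwatch gives you the usage help. dropwatch supports an interactive mode, which makes it easy to start and stop monitoring at any time.

Using it is simple too:

$ sudo dropwatch -l kas

Initalizing kallsyms db

dropwatch> start

Enabling monitoring...

Kernel monitoring activated.

Issue Ctrl-C to stop monitoring

1 drops at netlink_unicast+251

15 drops at unix_stream_recvmsg+32a

3 drops at unix_stream_connect+1dc

-l kas selects the kallsyms lookup method, which resolves each drop point to a symbol name plus offset, so you can go to the source code and work out where the packet was dropped.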
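Because the same drop point is reported again on every alert, it helps to total the counts per symbol before reading the source. The following is a minimal sketch, assuming the output has the "N drops at symbol+offset" shape shown above; the function name summarize_drops is made up for this illustration and is not part of dropwatch. Lines that carry only a raw address are skipped.

```python
import re
from collections import Counter

# Matches lines such as "15 drops at unix_stream_recvmsg+32a".
DROP_LINE = re.compile(r"^\s*(\d+) drops at (\S+?)\+([0-9a-fA-F]+)\s*$")


def summarize_drops(text):
    """Return a Counter mapping symbol name -> total drops seen in text."""
    totals = Counter()
    for line in text.splitlines():
        match = DROP_LINE.match(line)
        if match:
            count, symbol, _offset = match.groups()
            totals[symbol] += int(count)
    return totals


# The three lines from the session above.
sample = """\
1 drops at netlink_unicast+251
15 drops at unix_stream_recvmsg+32a
3 drops at unix_stream_connect+1dc
"""

# Print the busiest drop points first.
for symbol, total in summarize_drops(sample).most_common():
    print(f"{total:6d}  {symbol}")
```

To use it on a real session, save the dropwatch output to a file and pass the file contents to summarize_drops.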

For more detail, see this article (Using netstat and dropwatch to observe packet loss on Linux servers): http://prefetch.net/blog/index.php/2011/07/11/using-netstat-and-dropwatch-to-observe-packet-loss-on-linux-servers/

So how does it work? Before explaining that, let's look at the equivalent stap script for this tool:

$ cat /usr/share/doc/systemtap-1.6/examples/network/dropwatch.stp

#!/usr/bin/stap

############################################################

# Dropwatch.stp

# Author: Neil Horman <nhorman@redhat.com>

# An example script to mimic the behavior of the dropwatch utility

# http://fedorahosted.org/dropwatch

############################################################

# Array to hold the list of drop points we find

global locations

# Note when we turn the monitor on and off

probe begin { printf("Monitoring for dropped packets\n") }

probe end { printf("Stopping dropped packet monitor\n") }

# increment a drop counter for every location we drop at

probe kernel.trace("kfree_skb") { locations[$location] <<< 1 }

# Every 1 seconds report our drop locations

probe timer.sec(1)

{

printf("\n")

foreach (l in locations-) {

printf("%d packets dropped at %s\n",

@count(locations[l]), symname(l))

}

delete locations

}

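The core of the script is this line:

probe kernel.trace("kfree_skb") { locations[$location] <<< 1 }

Whenever kfree_skb is called, the kernel records the location in the protocol stack where the drop happened and notifies the user-space part of dropwatch over netlink; user space collects these locations, aggregates them, and displays them. That is the whole dropwatch workflow.

The question now is where and when the kernel calls kfree_skb. Let's keep digging: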

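dropwatch needs a patched kernel; kernels from RHEL5U4 onward already carry the patches.

The patches can be downloaded here: https://fedorahosted.org/releases/d/r/dropwatch/dropwatch_kernel_patches.tbz2

Looking through the 5 patches in that archive, we can see that dropwatch mainly modifies the following files: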

include/linux/netlink.h

include/trace/skb.h

net/core/Makefile

net/core/net-traces.c

include/linux/skbuff.h

net/core/datagram.c

include/linux/net_dropmon.h

net/core/drop_monitor.c

include/linux/Kbuild

net/Kconfig

net/core/Makefile

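The RHEL5U4 code shows this clearly:

//include/linux/skbuff.h

extern void kfree_skb(struct sk_buff *skb);

extern void consume_skb(struct sk_buff *skb);

What these patches do is make a dropwatch-capable kernel split the calls that free an skb into two classes:

1. consume_skb, for harmless frees
2. kfree_skb, for frees where the packet is actually being dropped

They also add the basic netlink plumbing that passes the drop locations up to user space.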
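The split can be pictured with a toy model. The sketch below is not kernel code: ToyDropMonitor and the Python functions named kfree_skb and consume_skb are hypothetical stand-ins, and the location strings are passed in by hand, whereas the real tracepoint supplies the caller's address. It only shows the idea that one free path is counted per call site and the other is not.

```python
from collections import Counter


class ToyDropMonitor:
    """Toy stand-in for the kernel side: counts drops per call site."""

    def __init__(self):
        self.hits = Counter()

    def on_kfree_skb(self, location):
        # Called for every traced free; 'location' plays the role of the
        # caller address that the real tracepoint passes along.
        self.hits[location] += 1

    def flush(self):
        # Hand the accumulated counts to "user space" and start over,
        # loosely mirroring a periodic report sent over netlink.
        report, self.hits = self.hits, Counter()
        return report


monitor = ToyDropMonitor()


def kfree_skb(skb, location):
    """Free a buffer because the packet is being dropped: traced."""
    monitor.on_kfree_skb(location)
    skb.clear()


def consume_skb(skb):
    """Free a buffer that was processed normally: not traced."""
    skb.clear()


# One packet that was delivered, and two that were thrown away.
consume_skb({"data": b"delivered"})
kfree_skb({"data": b"bad checksum"}, "udp_rcv")
kfree_skb({"data": b"queue full"}, "udp_rcv")

for location, count in monitor.flush().items():
    print(f"{count} drops at {location}")
```

Only the two kfree_skb calls end up in the report; the consume_skb call leaves no trace, which is why the split keeps ordinary frees out of the drop statistics.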

Knowing this, go to linux-2.6.18.x86_64/net/ipv4 or linux-2.6.18.x86_64/net/core in the RHEL 5U4 source tree and grep:

$ grep -rin kfree_skb .

./tcp_input.c:3122: __kfree_skb(skb);

./tcp_input.c:3219: __kfree_skb(skb);

./tcp_input.c:3234: __kfree_skb(skb);

./tcp_input.c:3318: __kfree_skb(skb);

...

./ip_fragment.c:166:static __inline__ void frag_kfree_skb(struct sk_buff *skb, int *work)

./ip_fragment.c:171: kfree_skb(skb);

./ip_fragment.c:211: frag_kfree_skb(fp, work);

./ip_fragment.c:452: frag_kfree_skb(fp, NULL);

./ip_fragment.c:578: frag_kfree_skb(free_it, NULL);

./ip_fragment.c:607: kfree_skb(skb);

./ip_fragment.c:732: kfree_skb(skb);

./udp.c:1028: kfree_skb(skb);

./udp.c:1049: kfree_skb(skb);

./udp.c:1069: kfree_skb(skb);

./udp.c:1083: kfree_skb(skb);

./udp.c:1134: kfree_skb(skb1);

./udp.c:1229: kfree_skb(skb);

./udp.c:1242: kfree_skb(skb);

./udp.c:1258: kfree_skb(skb);

./udp.c:1418: kfree_skb(skb);

./ip_sockglue.c:286: kfree_skb(skb);

./ip_sockglue.c:322: kfree_skb(skb);

./ip_sockglue.c:398: kfree_skb(skb);

./devinet.c:1140: kfree_skb(skb);

./xfrm4_ninput.c:29: kfree_skb(skb);

./xfrm4_ninput.c:122: kfree_skb(skb);

./tunnel4.c:85: kfree_skb(skb);

./icmp.c:47: * and moved all kfree_skb() up to

./icmp.c:1046: kfree_skb(skb);

./ip_forward.c:121: kfree_skb(skb);

./netfilter.c:73: kfree_skb(*pskb);

./netfilter.c:114: kfree_skb(*pskb);

./netfilter/ip_queue.c:277: kfree_skb(skb);

./netfilter/ip_queue.c:335: kfree_skb(nskb);

./netfilter/ip_queue.c:373: kfree_skb(e->skb);

./netfilter/ip_queue.c:556: kfree_skb(skb);

...

./netfilter/ipt_TCPMSS.c:153: kfree_skb(*pskb);

./netfilter/ipt_ULOG.c:435: kfree_skb(ub->skb);

./tcp.c:1458: kfree_skb(skb);

./tcp.c:1577: __kfree_skb(skb);

./ip_gre.c:482: kfree_skb(skb2);

./ip_gre.c:497: kfree_skb(skb2);

./ip_gre.c:504: kfree_skb(skb2);

...

./ip_gre.c:892: dev_kfree_skb(skb);

./raw.c:244: kfree_skb(skb);

./raw.c:254: kfree_skb(skb);

./raw.c:329: kfree_skb(skb);

./tcp_ipv4.c:1039: kfree_skb(skb);

./tcp_ipv4.c:1151: kfree_skb(skb);

./ipvs/ip_vs_xmit.c:213: kfree_skb(skb);

./ipvs/ip_vs_xmit.c:290: kfree_skb(skb);

./ipvs/ip_vs_xmit.c:374: kfree_skb(skb);

./ipvs/ip_vs_xmit.c:378: kfree_skb(skb);

./ipvs/ip_vs_xmit.c:423: kfree_skb(skb);

./ipvs/ip_vs_xmit.c:480: kfree_skb(skb);

./ipvs/ip_vs_xmit.c:553: dev_kfree_skb(skb);

./ipvs/ip_vs_core.c:197: kfree_skb(*pskb);

./ipvs/ip_vs_core.c:829: kfree_skb(*pskb);

./ipconfig.c:504: kfree_skb(skb);

./ipconfig.c:1019: kfree_skb(skb);

./xfrm4_input.c:50: kfree_skb(skb);

./xfrm4_input.c:162: kfree_skb(skb);

./fib_semantics.c:292: kfree_skb(skb);

./ipip.c:414: kfree_skb(skb2);

./ipip.c:429: kfree_skb(skb2);

./ipip.c:436: kfree_skb(skb2);

./ipip.c:444: kfree_skb(skb2);

./ipip.c:458: kfree_skb(skb2);

./ipip.c:482: kfree_skb(skb);

./ipip.c:609: dev_kfree_skb(skb);

./ipip.c:615: dev_kfree_skb(skb);

./ipip.c:654: dev_kfree_skb(skb);

./ipmr.c:185: kfree_skb(skb);

...

./ip_output.c:763: kfree_skb(skb);

./ip_output.c:964: kfree_skb(skb);

./ip_output.c:1313: kfree_skb(skb);

We can see a whole pile of places where a packet can be dropped.
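A list this long is easier to digest as a per-file tally. Here is a rough sketch, assuming the usual "path:line:text" shape of grep -rn output; count_call_sites is a name invented for this example, and the rule for skipping comments and definitions is a crude heuristic rather than a real C parser.

```python
import re
from collections import Counter

# "grep -rn" prints "<path>:<line number>:<matched text>".
GREP_LINE = re.compile(r"^(?P<path>[^:]+):(?P<line>\d+):(?P<text>.*)$")
# Any call whose name ends in kfree_skb: kfree_skb, __kfree_skb, dev_kfree_skb, ...
CALL = re.compile(r"\b\w*kfree_skb\s*\(")


def count_call_sites(grep_output):
    """Return a Counter mapping file path -> number of kfree_skb-style calls."""
    per_file = Counter()
    for raw in grep_output.splitlines():
        match = GREP_LINE.match(raw.strip())
        if not match:
            continue
        text = match.group("text").strip()
        # Heuristic: skip comment lines and function definitions.
        if text.startswith(("*", "//", "/*", "static")):
            continue
        if CALL.search(text):
            per_file[match.group("path")] += 1
    return per_file


# A few lines taken from the grep output above.
sample = """\
./ip_fragment.c:166:static __inline__ void frag_kfree_skb(struct sk_buff *skb, int *work)
./ip_fragment.c:171: kfree_skb(skb);
./ip_fragment.c:211: frag_kfree_skb(fp, work);
./udp.c:1028: kfree_skb(skb);
./icmp.c:47: * and moved all kfree_skb() up to
./icmp.c:1046: kfree_skb(skb);
"""

for path, count in count_call_sites(sample).most_common():
    print(f"{count:4d}  {path}")
```

The tally only says how many candidate call sites a file has; deciding which of them matches a symbol reported by dropwatch still means reading the function in question.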

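When dropwatch reports drops, the symbol information combined with the source above makes it easy to work out where the problem lies.

Summary: tools are accumulated knowledge.

Have fun!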


Orz…

binutils-static is needed by dropwatch-1.4-0.x86_64

This package is missing on 5u and I really can't sort it out... 霸业, any advice?

Yu Feng Reply:

March 10th, 2013 at 6:52 pm

Download the source and build it yourself.

Hello, this is the record I captured with dropwatch:

458 drops at location 0xffffffff814a5d9e

It shows no function name, only a raw address. What is the problem?

Yu Feng Reply:

April 25th, 2013 at 11:15 am

The matching kernel symbols need to be installed correctly.

lixianliang Reply:

April 27th, 2013 at 10:27 am

Found the problem: dropwatch has to be run as root before the function names show up.
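A raw address like the one in this thread can also be resolved by hand against /proc/kallsyms, whose lines have the form "address type name". The sketch below is only an illustration: load_symbols and resolve are hypothetical helper names, and the symbol table in the sample is invented so the example can run anywhere. On many systems a non-root read of /proc/kallsyms returns all-zero addresses, in which case nothing can be resolved, which fits the observation that root is needed.

```python
import bisect


def load_symbols(kallsyms_text):
    """Parse /proc/kallsyms-style text into a sorted list of (address, name)."""
    symbols = []
    for line in kallsyms_text.splitlines():
        parts = line.split()
        if len(parts) < 3:
            continue
        address = int(parts[0], 16)
        if address:  # all-zero addresses mean the kernel is hiding pointers
            symbols.append((address, parts[2]))
    symbols.sort()
    return symbols


def resolve(symbols, address):
    """Return 'name+offset' for the closest symbol at or below address."""
    addresses = [addr for addr, _name in symbols]
    index = bisect.bisect_right(addresses, address) - 1
    if index < 0:
        return None  # no usable symbol table, or address below every symbol
    base, name = symbols[index]
    return f"{name}+{address - base:x}"


# Invented table for illustration; real names come from /proc/kallsyms.
sample = """\
ffffffff814a5c00 T hypothetical_func_a
ffffffff814a5d00 T hypothetical_func_b
ffffffff814a5e00 T hypothetical_func_c
"""

print(resolve(load_symbols(sample), 0xFFFFFFFF814A5D9E))
```

On a real machine, read /proc/kallsyms as root and pass its contents to load_symbols instead of the sample text.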

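Hello.

We have been plagued by packet loss lately. We deployed two servers: one is a physical DELL R910 running Oracle Linux 6.3, the other is a guest on VMware vSphere 5.1, also running Oracle Linux 6.3. Both machines keep dropping packets. The data we collected is below; could you please help analyze what the problem is? Thanks.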

[root@orcl4dev ~]# dropwatch -l kas

Initalizing kallsyms db

dropwatch> start

Enabling monitoring…

Kernel monitoring activated.

Issue Ctrl-C to stop monitoring

1 drops at netlink_unicast+249

6 drops at __udp4_lib_mcast_deliver+364

13 drops at __udp4_lib_mcast_deliver+364

1 drops at __netif_receive_skb+48a

11 drops at __udp4_lib_mcast_deliver+364

7 drops at __udp4_lib_mcast_deliver+364

7 drops at __udp4_lib_mcast_deliver+364

8 drops at __udp4_lib_mcast_deliver+364

5 drops at __udp4_lib_mcast_deliver+364

9 drops at __udp4_lib_mcast_deliver+364

9 drops at __udp4_lib_mcast_deliver+364

1 drops at __netif_receive_skb+48a

4 drops at __udp4_lib_mcast_deliver+364

5 drops at __udp4_lib_mcast_deliver+364

1 drops at __netif_receive_skb+48a

5 drops at __udp4_lib_mcast_deliver+364

6 drops at __udp4_lib_mcast_deliver+364

9 drops at __udp4_lib_mcast_deliver+364

5 drops at __udp4_lib_mcast_deliver+364

7 drops at __udp4_lib_mcast_deliver+364

1 drops at __netif_receive_skb+48a

9 drops at __udp4_lib_mcast_deliver+364

3 drops at __netif_receive_skb+48a

1 drops at ip_rcv_finish+176

7 drops at __udp4_lib_mcast_deliver+364

2 drops at ip_rcv_finish+176

7 drops at __udp4_lib_mcast_deliver+364

1 drops at ip_rcv_finish+176

2 drops at ip_rcv_finish+176

4 drops at __udp4_lib_mcast_deliver+364

4 drops at __udp4_lib_mcast_deliver+364

1 drops at ip_rcv_finish+176

5 drops at __udp4_lib_mcast_deliver+364

2 drops at ip_rcv_finish+176

7 drops at __udp4_lib_mcast_deliver+364

8 drops at __udp4_lib_mcast_deliver+364

11 drops at __udp4_lib_mcast_deliver+364

2 drops at ip_rcv_finish+176

7 drops at __udp4_lib_mcast_deliver+364

1 drops at __netif_receive_skb+48a

1 drops at ip_rcv_finish+176

2 drops at ip_rcv_finish+176

3 drops at __udp4_lib_mcast_deliver+364

^CGot a stop message

dropwatch> exit

Shutting down …

[root@orcl4dev ~]# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:0C:29:1C:A1:18

inet addr:192.168.8.235 Bcast:192.168.8.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe1c:a118/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:2903 errors:0 dropped:54 overruns:0 frame:0

TX packets:165 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:252383 (246.4 KiB) TX bytes:22473 (21.9 KiB)

[root@orcl4dev ~]#

Yu Feng Reply:

July 2nd, 2013 at 5:45 pm

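I'll follow up on this one too.

One more question: the number of dropped packets shown by ifconfig differs from what dropwatch reports. Are the two counted in different ways?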
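For comparing the two numbers, it helps to know where each comes from. ifconfig reads the per-interface counters that the kernel exposes in /proc/net/dev, while dropwatch counts calls to kfree_skb anywhere in the stack, including paths such as netlink that never touch a NIC, so the totals need not agree. The sketch below pulls the dropped columns out of /proc/net/dev text; rx_tx_dropped is a name made up for this example, and the sample row is filled in from the ifconfig figures in this comment, with the columns ifconfig does not show set to zero.

```python
def rx_tx_dropped(proc_net_dev_text):
    """Map interface name -> (rx_dropped, tx_dropped) from /proc/net/dev text."""
    result = {}
    for line in proc_net_dev_text.splitlines():
        if ":" not in line:
            continue  # the two header lines contain '|' but no ':'
        name, rest = line.split(":", 1)
        fields = rest.split()
        if len(fields) < 16:
            continue
        # Receive columns: bytes packets errs drop fifo frame compressed multicast
        # Transmit columns start at index 8 with the same first four names.
        result[name.strip()] = (int(fields[3]), int(fields[11]))
    return result


# Illustrative input built from the ifconfig output above (unknown columns = 0).
sample = """\
Inter-|   Receive                                                |  Transmit
 face |bytes    packets errs drop fifo frame compressed multicast|bytes    packets errs drop fifo colls carrier compressed
  eth0:  252383    2903    0   54    0     0          0         0    22473     165    0    0    0     0       0          0
"""

for iface, (rx, tx) in rx_tx_dropped(sample).items():
    print(f"{iface}: rx_dropped={rx} tx_dropped={tx}")
```

Sampling this counter before and after a dropwatch run gives an interface-level figure to set beside the per-function counts.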

Here is the netstat data as well:

[root@orcl4dev ~]# netstat -s

Ip:

4956 total packets received

108 with invalid addresses

0 forwarded

0 incoming packets discarded

4848 incoming packets delivered

194 requests sent out

Icmp:

0 ICMP messages received

0 input ICMP message failed.

ICMP input histogram:

4 ICMP messages sent

0 ICMP messages failed

ICMP output histogram:

destination unreachable: 4

IcmpMsg:

OutType3: 4

Tcp:

4 active connections openings

2 passive connection openings

4 failed connection attempts

0 connection resets received

2 connections established

172 segments received

149 segments send out

1 segments retransmited

0 bad segments received.

4 resets sent

Udp:

38 packets received

4 packets to unknown port received.

0 packet receive errors

42 packets sent

UdpLite:

TcpExt:

2 delayed acks sent

38 packets header predicted

29 acknowledgments not containing data received

76 predicted acknowledgments

0 TCP data loss events

1 other TCP timeouts

1 DSACKs received

IpExt:

InMcastPkts: 38

OutMcastPkts: 20

InBcastPkts: 4632

InOctets: 456771

OutOctets: 24779

InMcastOctets: 7073

OutMcastOctets: 3459

InBcastOctets: 423801

Orz…

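I have been struggling with packet loss lately too.

I hope dropwatch can help pin down some of the problems.

Packet captures show a fairly high retransmission rate, yet the system load is not high, and getting someone to check the switch is not easy;

so for now I can only start from the system side.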

When I run dropwatch on Ubuntu 14.10, it reports that the system does not support NET_DM. How can I fix this?

crystal@crystal:~/dropwatch/src$ ./dropwatch

Unable to find NET_DM family, dropwatch can’t work

Cleaning up on socket creation error

crystal Reply:

December 2nd, 2015 at 9:59 am

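Sorry, after looking into it more carefully I found that NET_DM support requires the corresponding kernel option:

CONFIG_NET_DROP_MONITOR=m

After that, loading the module is enough: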

modprobe drop_monitor
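The same check can be scripted before trying dropwatch on a new machine. This is a small sketch, assuming the kernel build configuration is available as text (many distributions ship it as /boot/config-<kernel release>, but that location is an assumption); kconfig_value and drop_monitor_hint are hypothetical helper names.

```python
import re


def kconfig_value(config_text, option):
    """Return the value set for option ('y', 'm', ...) or None if not set."""
    pattern = re.compile(rf"^{re.escape(option)}=(.*)$", re.MULTILINE)
    match = pattern.search(config_text)
    return match.group(1).strip() if match else None


def drop_monitor_hint(config_text):
    """Translate the CONFIG_NET_DROP_MONITOR setting into a next step."""
    value = kconfig_value(config_text, "CONFIG_NET_DROP_MONITOR")
    if value == "y":
        return "built in: NET_DM should already be available"
    if value == "m":
        return "module: run 'modprobe drop_monitor' before dropwatch"
    return "not enabled: this kernel build has no drop monitor"


# Fragment in the style of a kernel config file.
sample = """\
CONFIG_NET=y
CONFIG_NET_DROP_MONITOR=m
# CONFIG_NET_PKTGEN is not set
"""

print(drop_monitor_hint(sample))
```

A commented-out "is not set" line is treated as disabled, because only lines that begin with the option name followed by "=" count as a setting.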