A Detailed Look at Server Memory Bandwidth: Calculation and Usage Measurement

Original article. If you repost it, please credit: reposted from 系统技术非业余研究

Permalink: A Detailed Look at Server Memory Bandwidth: Calculation and Usage Measurement

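A while ago, while tuning MySQL, we hit a bottleneck and suspected that excessive memory copying was using up the memory bandwidth. On Linux, top shows CPU utilization and iostat shows I/O device utilization, but there is no tool that measures memory bandwidth usage. We could see large amounts of memcpy and string copying (measurable with SystemTap), but plain data-movement operations cannot be counted that way. What we wanted was a hardware-level way to find out how many bytes the CPUs had read from and written to main memory over a given period.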

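So our measurement goals come down to two questions: 1. What is the real memory bandwidth of a server like ours? 2. How much of that bandwidth is our application actually using?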

First, let's look at our server's configuration:

$ sudo ~/aspersa/summary

# Aspersa System Summary Report ##############################

Date | 2011-09-12 11:23:11 UTC (local TZ: CST +0800)

Hostname | my031121.sqa.cm4

Uptime | 13 days, 3:52, 2 users, load average: 0.02, 0.01, 0.00

System | Dell Inc.; PowerEdge R710; vNot Specified (<OUT OF SPEC>)

Service Tag | DHY6S2X

Release | Red Hat Enterprise Linux Server release 5.4 (Tikanga)

Kernel | 2.6.18-164.el5

Architecture | CPU = 64-bit, OS = 64-bit

Threading | NPTL 2.5

Compiler | GNU CC version 4.1.2 20080704 (Red Hat 4.1.2-44).

SELinux | Disabled

# Processor ##################################################

Processors | physical = 2, cores = 12, virtual = 24, hyperthreading = yes

Speeds | 24x2926.089

Models | 24xIntel(R) Xeon(R) CPU X5670 @ 2.93GHz

Caches | 24x12288 KB

# Memory #####################################################

Total | 94.40G

Free | 4.39G

Used | physical = 90.01G, swap = 928.00k, virtual = 90.01G

Buffers | 1.75G

Caches | 7.85G

Used | 78.74G

Swappiness | vm.swappiness = 0

DirtyPolicy | vm.dirty_ratio = 40, vm.dirty_background_ratio = 10

Locator Size Speed Form Factor Type Type Detail

========= ======== ================= ============= ============= ===========

DIMM_A1 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_A2 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_A3 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_A4 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_A5 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_A6 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_B1 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_B2 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_B3 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_B4 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_B5 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_B6 8192 MB 1333 MHz (0.8 ns) DIMM {OUT OF SPEC} Synchronous

DIMM_A7 {EMPTY} Unknown DIMM {OUT OF SPEC} Synchronous

DIMM_A8 {EMPTY} Unknown DIMM {OUT OF SPEC} Synchronous

DIMM_A9 {EMPTY} Unknown DIMM {OUT OF SPEC} Synchronous

DIMM_B7 {EMPTY} Unknown DIMM {OUT OF SPEC} Synchronous

DIMM_B8 {EMPTY} Unknown DIMM {OUT OF SPEC} Synchronous

DIMM_B9 {EMPTY} Unknown DIMM {OUT OF SPEC} Synchronous

...

This DELL R710 has two X5670 CPUs, each with 6 cores and Hyper-Threading, for 24 logical CPUs in total. Twelve 8192 MB (1333 MHz) DIMMs are installed.
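The socket, core and thread counts above can also be read straight from /proc/cpuinfo. The following is a minimal sketch, assuming the usual x86 field names `processor`, `physical id` and `core id`. The helper name `cpu_counts` is hypothetical, and the file path is a parameter only so the logic can be tried on a saved copy:

```bash
#!/bin/bash
# Count sockets, physical cores and logical CPUs from a cpuinfo-style file.
# Assumption: x86 /proc/cpuinfo layout ("processor", "physical id", "core id").
cpu_counts() {
    local f=${1:-/proc/cpuinfo}
    awk -F': *' '
        /^processor/   { logical++ }                 # one block per logical CPU
        /^physical id/ { pkg = $2; sockets[pkg] = 1 } # socket of the current block
        /^core id/     { cores[pkg "-" $2] = 1 }     # core ids repeat per socket
        END {
            ns = 0; for (s in sockets) ns++
            nc = 0; for (c in cores) nc++
            printf "sockets=%d cores=%d logical=%d\n", ns, nc, logical
        }' "$f"
}
```

On the R710 above, one would expect `cpu_counts` to print `sockets=2 cores=12 logical=24`, matching the aspersa summary. Core ids are keyed together with the socket id because each socket numbers its cores independently.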

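Our machines have moved from the old FSB (front-side bus) architecture to the current NUMA architecture (thanks to @fcicq for the information). See the figure below (source):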

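The figure clearly shows that each CPU has its own memory controller, connected directly to memory over 3 channels, and that the CPUs are connected to each other via QPI. Data flows over both the memory controllers and QPI.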

Our server uses DDR3 memory, so we need to work out the memory bandwidth available under this architecture.

For how DDR3 memory bandwidth is calculated, see here.

The configuration above shows the DIMMs in my server: DIMM_A1 8192 MB 1333 MHz (0.8 ns). There are 12 of them, 6 per CPU.

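Following that article, the calculation goes like this. Per channel: (1333/8) × 64 × 8 / 8 ≈ 10.6 GB/s. That is the 1333/8 MHz DDR3 core clock × 64-bit bus width × 8n prefetch ÷ 8 bits per byte, which simplifies to 1333 MT/s × 8 bytes per transfer. Our CPU has 3 channels, so its total memory bandwidth is 10.6 × 3 = 31.8 GB/s. My machine has 2 CPUs, so the total is 63.6 GB/s. That's the theory, right? (If I got something wrong, please correct me.) We'll measure it in a moment.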
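Here is the same arithmetic as two small shell helpers, so it can be redone for other DIMM speeds and channel counts. This is a sketch under two assumptions: that the "1333 MHz" reported by dmidecode is really the DDR3 data rate in MT/s, and that every channel is 64 bits wide. The function names are hypothetical. Results are integer MB/s (10^6 bytes) so that plain shell arithmetic is enough:

```bash
#!/bin/bash
# Theoretical peak DDR3 bandwidth in MB/s.
# Assumptions: speed argument is the data rate in MT/s; channels are 64 bits wide.

# One channel: transfers per second x 64 bits / 8 bits per byte.
ddr3_channel_mbps() {
    echo $(( $1 * 64 / 8 ))
}

# Whole machine: per-channel peak x channels per socket x sockets.
# Usage: ddr3_system_mbps MT_PER_S CHANNELS SOCKETS
ddr3_system_mbps() {
    local per_channel
    per_channel=$(ddr3_channel_mbps "$1")
    echo $(( per_channel * $2 * $3 ))
}
```

`ddr3_system_mbps 1333 3 2` prints 63984, about 64 GB/s. The 63.6 GB/s above is lower only because the per-channel 10.66 GB/s was rounded down to 10.6.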

Going by this calculation, memory bandwidth clearly shouldn't be the bottleneck. So where is the problem? Let's keep digging!

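We need a tool that moves memory around. The bandwidth such tools report is the maximum memory bandwidth a single thread can achieve. Let's pick a simple one, mbw (Memory BandWidth benchmark), and try it out: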

$ sudo apt-get install mbw
$ mbw -q -n 1 256
0   Method: MEMCPY   Elapsed: 0.19652   MiB: 256.00000   Copy: 1302.647 MiB/s
AVG Method: MEMCPY   Elapsed: 0.19652   MiB: 256.00000   Copy: 1302.647 MiB/s
0   Method: DUMB     Elapsed: 0.12668   MiB: 256.00000   Copy: 2020.840 MiB/s
AVG Method: DUMB     Elapsed: 0.12668   MiB: 256.00000   Copy: 2020.840 MiB/s
0   Method: MCBLOCK  Elapsed: 0.02945   MiB: 256.00000   Copy: 8693.585 MiB/s
AVG Method: MCBLOCK  Elapsed: 0.02945   MiB: 256.00000   Copy: 8693.585 MiB/s
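With `-q`, mbw prints one line per run plus an `AVG` line per method. The small awk filter below pulls out just the averages. It is a sketch that assumes the output layout matches the sample above, which may differ between mbw versions. `mbw_avg` is a hypothetical helper name:

```bash
#!/bin/bash
# Print "<method> <MiB/s>" for every AVG line of mbw output read on stdin.
# Assumption: fields look like "AVG Method: X ... Copy: N MiB/s".
mbw_avg() {
    awk '$1 == "AVG" {
        method = ""; rate = ""
        # Scan the fields for the labels instead of relying on fixed positions.
        for (i = 1; i < NF; i++) {
            if ($i == "Method:") method = $(i + 1)
            if ($i == "Copy:")   rate   = $(i + 1)
        }
        if (method != "" && rate != "") print method, rate
    }'
}
```

For example, `mbw -q -n 1 256 | mbw_avg` would print one `METHOD MiB/s` pair per line, such as `MEMCPY 1302.647` for the run above.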

For tools that measure CPU memory bandwidth, @王王争 helped a great deal and gave me a thorough introduction to PTU (intel-performance-tuning-utility), which can be downloaded here.

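After unpacking the downloaded binary package, the vtbwrun program in its bin directory is the hardware-level memory bandwidth measurement tool we need. Here is its help output: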

$ sudo ptu40_005_lin_intel64/bin/vtbwrun --help

***********************************

PTU

Performance Tuning Utility

For

Nehalem

Ver 0.4

***********************************

Usage: ./ptu [-c] [-i <iterations>] [-A] [-r] [-p] [-w]

-c disable CPU check.

-i <iterations> specify how many iterations PTU should run.

-A Automated mode, no user Input.

-r Monitor QHL read/write requests from the IOH

*************************** EXCLUSIVE ******************************

-p Monitor partial writes on Memory Channel 0,1,2

-w Monitor WriteBack, Conflict event

*************************** EXCLUSIVE ******************************

$ sudo ptu40_005_lin_intel64/bin/vtbwrun -c -A

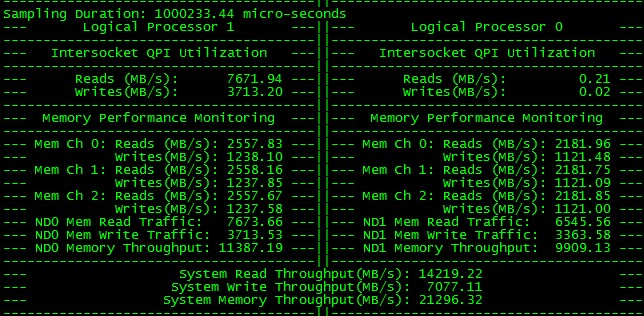

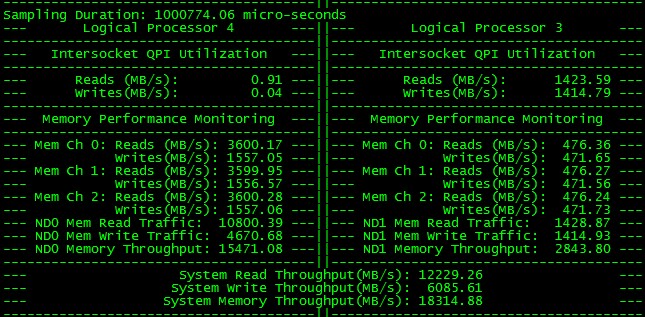

Screenshots taken while it was running:

The screenshot clearly shows System Memory Throughput (MB/s): 13019.45, and QPI traffic is also substantial.

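We also need to know the CPU topology, that is, which physical CPU, core and hyperthread each OS-visible CPU maps to. With that information we can use taskset to pin the memory test tool to specific CPUs and observe memory usage precisely. For CPU topology, see here.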

$ sudo ./cpu_topology64.out

Advisory to Users on system topology enumeration

This utility is for demonstration purpose only. It assumes the hardware topology

configuration within a coherent domain does not change during the life of an OS

session. If an OS support advanced features that can change hardware topology

configurations, more sophisticated adaptation may be necessary to account for

the hardware configuration change that might have added and reduced the number

of logical processors being managed by the OS.

User should also`be aware that the system topology enumeration algorithm is

based on the assumption that CPUID instruction will return raw data reflecting

the native hardware configuration. When an application runs inside a virtual

machine hosted by a Virtual Machine Monitor (VMM), any CPUID instructions

issued by an app (or a guest OS) are trapped by the VMM and it is the VMM's

responsibility and decision to emulate/supply CPUID return data to the virtual

machines. When deploying topology enumeration code based on querying CPUID

inside a VM environment, the user must consult with the VMM vendor on how an VMM

will emulate CPUID instruction relating to topology enumeration.

Software visible enumeration in the system:

Number of logical processors visible to the OS: 24

Number of logical processors visible to this process: 24

Number of processor cores visible to this process: 12

Number of physical packages visible to this process: 2

Hierarchical counts by levels of processor topology:

# of cores in package 0 visible to this process: 6 .

# of logical processors in Core 0 visible to this process: 2 .

# of logical processors in Core 1 visible to this process: 2 .

# of logical processors in Core 2 visible to this process: 2 .

# of logical processors in Core 3 visible to this process: 2 .

# of logical processors in Core 4 visible to this process: 2 .

# of logical processors in Core 5 visible to this process: 2 .

# of cores in package 1 visible to this process: 6 .

# of logical processors in Core 0 visible to this process: 2 .

# of logical processors in Core 1 visible to this process: 2 .

# of logical processors in Core 2 visible to this process: 2 .

# of logical processors in Core 3 visible to this process: 2 .

# of logical processors in Core 4 visible to this process: 2 .

# of logical processors in Core 5 visible to this process: 2 .

Affinity masks per SMT thread, per core, per package:

Individual:

P:0, C:0, T:0 --> 1

P:0, C:0, T:1 --> 1z3

Core-aggregated:

P:0, C:0 --> 1001

Individual:

P:0, C:1, T:0 --> 4

P:0, C:1, T:1 --> 4z3

Core-aggregated:

P:0, C:1 --> 4004

Individual:

P:0, C:2, T:0 --> 10

P:0, C:2, T:1 --> 1z4

Core-aggregated:

P:0, C:2 --> 10010

Individual:

P:0, C:3, T:0 --> 40

P:0, C:3, T:1 --> 4z4

Core-aggregated:

P:0, C:3 --> 40040

Individual:

P:0, C:4, T:0 --> 100

P:0, C:4, T:1 --> 1z5

Core-aggregated:

P:0, C:4 --> 100100

Individual:

P:0, C:5, T:0 --> 400

P:0, C:5, T:1 --> 4z5

Core-aggregated:

P:0, C:5 --> 400400

Pkg-aggregated:

P:0 --> 555555

Individual:

P:1, C:0, T:0 --> 2

P:1, C:0, T:1 --> 2z3

Core-aggregated:

P:1, C:0 --> 2002

Individual:

P:1, C:1, T:0 --> 8

P:1, C:1, T:1 --> 8z3

Core-aggregated:

P:1, C:1 --> 8008

Individual:

P:1, C:2, T:0 --> 20

P:1, C:2, T:1 --> 2z4

Core-aggregated:

P:1, C:2 --> 20020

Individual:

P:1, C:3, T:0 --> 80

P:1, C:3, T:1 --> 8z4

Core-aggregated:

P:1, C:3 --> 80080

Individual:

P:1, C:4, T:0 --> 200

P:1, C:4, T:1 --> 2z5

Core-aggregated:

P:1, C:4 --> 200200

Individual:

P:1, C:5, T:0 --> 800

P:1, C:5, T:1 --> 8z5

Core-aggregated:

P:1, C:5 --> 800800

Pkg-aggregated:

P:1 --> aaaaaa

APIC ID listings from affinity masks

OS cpu 0, Affinity mask 00000001 - apic id 20

OS cpu 1, Affinity mask 00000002 - apic id 0

OS cpu 2, Affinity mask 00000004 - apic id 22

OS cpu 3, Affinity mask 00000008 - apic id 2

OS cpu 4, Affinity mask 00000010 - apic id 24

OS cpu 5, Affinity mask 00000020 - apic id 4

OS cpu 6, Affinity mask 00000040 - apic id 30

OS cpu 7, Affinity mask 00000080 - apic id 10

OS cpu 8, Affinity mask 00000100 - apic id 32

OS cpu 9, Affinity mask 00000200 - apic id 12

OS cpu 10, Affinity mask 00000400 - apic id 34

OS cpu 11, Affinity mask 00000800 - apic id 14

OS cpu 12, Affinity mask 00001000 - apic id 21

OS cpu 13, Affinity mask 00002000 - apic id 1

OS cpu 14, Affinity mask 00004000 - apic id 23

OS cpu 15, Affinity mask 00008000 - apic id 3

OS cpu 16, Affinity mask 00010000 - apic id 25

OS cpu 17, Affinity mask 00020000 - apic id 5

OS cpu 18, Affinity mask 00040000 - apic id 31

OS cpu 19, Affinity mask 00080000 - apic id 11

OS cpu 20, Affinity mask 00100000 - apic id 33

OS cpu 21, Affinity mask 00200000 - apic id 13

OS cpu 22, Affinity mask 00400000 - apic id 35

OS cpu 23, Affinity mask 00800000 - apic id 15

Package 0 Cache and Thread details

Box Description:

Cache is cache level designator

Size is cache size

OScpu# is cpu # as seen by OS

Core is core#[_thread# if > 1 thread/core] inside socket

AffMsk is AffinityMask(extended hex) for core and thread

CmbMsk is Combined AffinityMask(extended hex) for hw threads sharing cache

CmbMsk will differ from AffMsk if > 1 hw_thread/cache

Extended Hex replaces trailing zeroes with 'z#'

where # is number of zeroes (so '8z5' is '0x800000')

L1D is Level 1 Data cache, size(KBytes)= 32, Cores/cache= 2, Caches/package= 6

L1I is Level 1 Instruction cache, size(KBytes)= 32, Cores/cache= 2, Caches/package= 6

L2 is Level 2 Unified cache, size(KBytes)= 256, Cores/cache= 2, Caches/package= 6

L3 is Level 3 Unified cache, size(KBytes)= 12288, Cores/cache= 12, Caches/package= 1

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L1D | L1D | L1D | L1D | L1D | L1D |

Size | 32K | 32K | 32K | 32K | 32K | 32K |

OScpu#| 0 12| 2 14| 4 16| 6 18| 8 20| 10 22|

Core | c0_t0 c0_t1| c1_t0 c1_t1| c2_t0 c2_t1| c3_t0 c3_t1| c4_t0 c4_t1| c5_t0 c5_t1|

AffMsk| 1 1z3| 4 4z3| 10 1z4| 40 4z4| 100 1z5| 400 4z5|

CmbMsk| 1001 | 4004 | 10010 | 40040 |100100 |400400 |

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L1I | L1I | L1I | L1I | L1I | L1I |

Size | 32K | 32K | 32K | 32K | 32K | 32K |

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L2 | L2 | L2 | L2 | L2 | L2 |

Size | 256K | 256K | 256K | 256K | 256K | 256K |

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L3 |

Size | 12M |

CmbMsk|555555 |

+-----------------------------------------------------------------------------------+

Combined socket AffinityMask= 0x555555

Package 1 Cache and Thread details

Box Description:

Cache is cache level designator

Size is cache size

OScpu# is cpu # as seen by OS

Core is core#[_thread# if > 1 thread/core] inside socket

AffMsk is AffinityMask(extended hex) for core and thread

CmbMsk is Combined AffinityMask(extended hex) for hw threads sharing cache

CmbMsk will differ from AffMsk if > 1 hw_thread/cache

Extended Hex replaces trailing zeroes with 'z#'

where # is number of zeroes (so '8z5' is '0x800000')

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L1D | L1D | L1D | L1D | L1D | L1D |

Size | 32K | 32K | 32K | 32K | 32K | 32K |

OScpu#| 1 13| 3 15| 5 17| 7 19| 9 21| 11 23|

Core | c0_t0 c0_t1| c1_t0 c1_t1| c2_t0 c2_t1| c3_t0 c3_t1| c4_t0 c4_t1| c5_t0 c5_t1|

AffMsk| 2 2z3| 8 8z3| 20 2z4| 80 8z4| 200 2z5| 800 8z5|

CmbMsk| 2002 | 8008 | 20020 | 80080 |200200 |800800 |

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L1I | L1I | L1I | L1I | L1I | L1I |

Size | 32K | 32K | 32K | 32K | 32K | 32K |

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L2 | L2 | L2 | L2 | L2 | L2 |

Size | 256K | 256K | 256K | 256K | 256K | 256K |

+-------------+-------------+-------------+-------------+-------------+-------------+

Cache | L3 |

Size | 12M |

CmbMsk|aaaaaa |

+-----------------------------------------------------------------------------------+
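The affinity masks above use the tool's "extended hex" notation, where a trailing `z#` stands for # zeros. Below is a minimal decoder, plus a function that lists which OS CPU numbers a mask selects (the form `taskset -c` takes). Both names are hypothetical, and masks are assumed to fit in the shell's 64-bit arithmetic:

```bash
#!/bin/bash
# Expand extended hex such as "8z5" (8 followed by five zeros) to "0x800000".
exthex_to_hex() {
    local s="$1" digits zeros out
    digits=${s%%z*}
    zeros=0
    case $s in *z*) zeros=${s#*z} ;; esac
    out="0x$digits"
    while [ "$zeros" -gt 0 ]; do
        out="${out}0"
        zeros=$((zeros - 1))
    done
    echo "$out"
}

# List the bit positions (OS CPU numbers) set in a mask, space separated.
# Assumption: the mask is non-negative and fits in 64-bit shell arithmetic.
mask_to_cpus() {
    local mask cpu list
    mask=$(( $1 ))
    cpu=0
    list=""
    while [ "$mask" -ne 0 ]; do
        if [ $(( mask & 1 )) -eq 1 ]; then
            list="${list:+$list }$cpu"
        fi
        mask=$(( mask >> 1 ))
        cpu=$(( cpu + 1 ))
    done
    echo "$list"
}
```

`mask_to_cpus "$(exthex_to_hex 1z3)"` prints 12, which matches the `OScpu#` row above: c0_t1 of package 0 is OS CPU 12. `mask_to_cpus 0x555555` lists the even CPUs 0 to 22, the package 0 mask.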

# Or, the simplest way: let Erlang tell us

$ erl

Erlang R14B04 (erts-5.8.5) [source] [64-bit] [smp:2:2] [rq:2] [async-threads:0] [hipe] [kernel-poll:false]

Eshell V5.8.5 (abort with ^G)

1> erlang:system_info(cpu_topology).

[{processor,[{core,[{thread,{logical,1}},

{thread,{logical,13}}]},

{core,[{thread,{logical,3}},{thread,{logical,15}}]},

{core,[{thread,{logical,5}},{thread,{logical,17}}]},

{core,[{thread,{logical,7}},{thread,{logical,19}}]},

{core,[{thread,{logical,9}},{thread,{logical,21}}]},

{core,[{thread,{logical,11}},{thread,{logical,23}}]}]},

{processor,[{core,[{thread,{logical,0}},

{thread,{logical,12}}]},

{core,[{thread,{logical,2}},{thread,{logical,14}}]},

{core,[{thread,{logical,4}},{thread,{logical,16}}]},

{core,[{thread,{logical,6}},{thread,{logical,18}}]},

{core,[{thread,{logical,8}},{thread,{logical,20}}]},

{core,[{thread,{logical,10}},{thread,{logical,22}}]}]}]
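A third option, not used in this article, is to read the Linux sysfs topology files `/sys/devices/system/cpu/cpuN/topology/physical_package_id` and `core_id`. The sketch below prints `OS-cpu package core`, grouped by package, so the CPU list for one socket can be handed to taskset. The helper name and its optional root argument are assumptions added for illustration:

```bash
#!/bin/bash
# Print "<os cpu> <package> <core>" for every CPU, sorted by package then CPU.
# The root argument (default /sys) exists only so a copy of the tree can be used.
cpu_package_map() {
    local root=${1:-/sys} d cpu
    for d in "$root"/devices/system/cpu/cpu[0-9]*; do
        # Skip entries without topology information (e.g. unmatched glob).
        [ -r "$d/topology/physical_package_id" ] || continue
        cpu=${d##*/cpu}
        echo "$cpu $(cat "$d/topology/physical_package_id") $(cat "$d/topology/core_id")"
    done | sort -k2,2n -k1,1n
}
```

For example, `cpu_package_map | awk '$2 == 0 { print $1 }'` would list the OS CPUs on package 0.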

# While we're at it, a shell script that starts several mbw instances, each pinned to a given CPU

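$ cat run_mbw.sh
#!/bin/bash
# Usage: ./run_mbw.sh FROM TO STEP
# Starts one background mbw (256 MiB array, 9999 runs) pinned to each logical CPU FROM, FROM+STEP, ... up to TO.
if [ $# -ne 3 ]; then echo "usage: $0 FROM TO STEP" >&2; exit 1; fi
for i in $(seq "$1" "$3" "$2")   # seq FIRST INCREMENT LAST
do
    echo "$i"
    taskset -c "$i" mbw -q -n 9999 256 >/dev/null &
done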

$ chmod +x run_mbw.sh

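We know that physical CPU 0 has the odd-numbered logical CPUs and physical CPU 1 the even-numbered ones (taking the processors in the order Erlang lists them above).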

Just run ./run_mbw.sh from to step to do this.

With these tools in place we can run the experiments. Take a sip of water and let's continue:

$ sudo ./run_mbw.sh 0 22 2
0
2
...

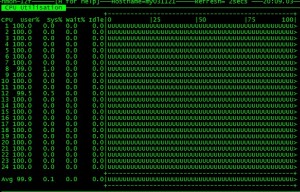

The CPU binding matches what we expected; see the figure below:

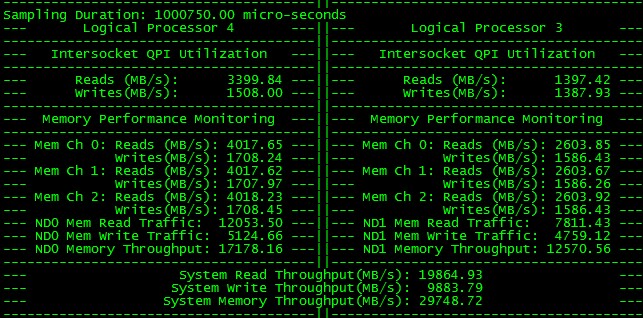

So what about memory bandwidth? See the figure:

Something is off: memory bandwidth is being consumed on both CPUs, and there is QPI traffic.

$ sudo ./run_mbw.sh 1 23 2
1
3
...

See the figure below.

At this point all CPUs are fully loaded. So what about memory bandwidth? See the figure:

We can see that the maximum memory bandwidth of this machine is 32 GB/s.

This puzzled us: why does this happen? The CPUs are not consuming their own local memory as expected, and QPI traffic is also high.

The root cause is that we had disabled NUMA at OS boot (numa=off), to keep mysqld from swapping on machines with large memory.

Let's confirm:

# cat /proc/cmdline
ro root=LABEL=/ numa=off console=tty0 console=ttyS1,115200
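The same check can be scripted: look for `numa=off` on the kernel command line and count the node directories the kernel exposes under sysfs. This is a sketch. `numa_status` is a hypothetical helper, and both paths are parameters only so it can be run against copies:

```bash
#!/bin/bash
# Report whether the kernel was booted with numa=off and how many NUMA node
# directories exist. Defaults: /proc/cmdline and /sys/devices/system/node.
numa_status() {
    local cmdline=${1:-/proc/cmdline} nodedir=${2:-/sys/devices/system/node}
    local off=no n=0 d
    # Match numa=off as a whole word on the command line.
    if grep -qE '(^| )numa=off( |$)' "$cmdline"; then off=yes; fi
    for d in "$nodedir"/node[0-9]*; do
        if [ -d "$d" ]; then n=$((n + 1)); fi
    done
    echo "numa_off=$off nodes=$n"
}
```

On the first machine this would report `numa_off=yes`. On the second machine below, whose command line has no numa=off, it should report `numa_off=no` together with its 2 nodes.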

Once the cause is known, the rest is easy. Let's find another machine where NUMA is not disabled and repeat the experiment:

$ cat /proc/cmdline

ro root=LABEL=/ console=tty0 console=ttyS0,9600 # NUMA is indeed not disabled; this machine now has 2 nodes

$ sudo ~/aspersa/summary

# Aspersa System Summary Report ##############################

Date | 2011-09-12 12:26:18 UTC (local TZ: CST +0800)

Hostname | my031089

Uptime | 18 days, 10:33, 2 users, load average: 0.04, 0.01, 0.00

System | Huawei Technologies Co., Ltd.; Tecal RH2285; vV100R001 (Main Server Chassis)

Service Tag | 210231771610B4001897

Release | Red Hat Enterprise Linux Server release 5.4 (Tikanga)

Kernel | 2.6.18-164.el5

Architecture | CPU = 64-bit, OS = 64-bit

Threading | NPTL 2.5

Compiler | GNU CC version 4.1.2 20080704 (Red Hat 4.1.2-44).

SELinux | Disabled

# Processor ##################################################

Processors | physical = 2, cores = 8, virtual = 16, hyperthreading = yes

Speeds | 16x1600.000

Models | 16xIntel(R) Xeon(R) CPU E5620 @ 2.40GHz

Caches | 16x12288 KB

# Memory #####################################################

Total | 23.53G

Free | 8.40G

Used | physical = 15.13G, swap = 20.12M, virtual = 15.15G

Buffers | 863.79M

Caches | 4.87G

Used | 8.70G

Swappiness | vm.swappiness = 60

DirtyPolicy | vm.dirty_ratio = 40, vm.dirty_background_ratio = 10

Locator Size Speed Form Factor Type Type Detail

========= ======== ================= ============= ============= ===========

DIMM_A0 4096 MB 1066 MHz (0.9 ns) DIMM {OUT OF SPEC} Other

DIMM_B0 4096 MB 1066 MHz (0.9 ns) DIMM {OUT OF SPEC} Other

DIMM_C0 4096 MB 1066 MHz (0.9 ns) DIMM {OUT OF SPEC} Other

DIMM_D0 4096 MB 1066 MHz (0.9 ns) DIMM {OUT OF SPEC} Other

DIMM_E0 4096 MB 1066 MHz (0.9 ns) DIMM {OUT OF SPEC} Other

DIMM_F0 4096 MB 1066 MHz (0.9 ns) DIMM {OUT OF SPEC} Other

DIMM_A1 {EMPTY} Unknown DIMM {OUT OF SPEC} Other

DIMM_B1 {EMPTY} Unknown DIMM {OUT OF SPEC} Other

DIMM_C1 {EMPTY} Unknown DIMM {OUT OF SPEC} Other

DIMM_D1 {EMPTY} Unknown DIMM {OUT OF SPEC} Other

DIMM_E1 {EMPTY} Unknown DIMM {OUT OF SPEC} Other

DIMM_F1 {EMPTY} Unknown DIMM {OUT OF SPEC} Other

...

$ erl

Erlang R14B03 (erts-5.8.4) [source] [64-bit] [smp:16:16] [rq:16] [async-threads:0] [hipe] [kernel-poll:false]

Eshell V5.8.4 (abort with ^G)

1> erlang:system_info(cpu_topology).


[{node,[{processor,[{core,[{thread,{logical,4}},

{thread,{logical,12}}]},

{core,[{thread,{logical,5}},{thread,{logical,13}}]},

{core,[{thread,{logical,6}},{thread,{logical,14}}]},

{core,[{thread,{logical,7}},{thread,{logical,15}}]}]}]},

{node,[{processor,[{core,[{thread,{logical,0}},

{thread,{logical,8}}]},

{core,[{thread,{logical,1}},{thread,{logical,9}}]},

{core,[{thread,{logical,2}},{thread,{logical,10}}]},

{core,[{thread,{logical,3}},{thread,{logical,11}}]}]}]}]

2>

This is a Huawei machine with two E5620 CPUs and 16 logical CPUs in total: logical CPUs 4-7 and 12-15 belong to physical CPU 0, and logical CPUs 0-3 and 8-11 belong to physical CPU 1.
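One variant is worth noting. A reader comment below suggests numactl instead of taskset, because numactl can bind both the CPU and the memory allocation to one node. The sketch below illustrates that alternative; it is not what was used for the measurements in this article. `run_mbw_node` and the `DRY_RUN` switch are hypothetical additions:

```bash
#!/bin/bash
# Start COUNT background mbw instances with CPU and memory bound to one NUMA node.
# Usage: run_mbw_node NODE COUNT [SIZE_MIB]; DRY_RUN=1 only prints the commands.
run_mbw_node() {
    local node=$1 count=$2 size=${3:-256} i=0
    while [ "$i" -lt "$count" ]; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo numactl --cpunodebind="$node" --membind="$node" mbw -q -n 9999 "$size"
        else
            numactl --cpunodebind="$node" --membind="$node" mbw -q -n 9999 "$size" >/dev/null &
        fi
        i=$((i + 1))
    done
}
```

For example, `run_mbw_node 1 4` would start four mbw instances whose threads and allocations both stay on node 1, and `DRY_RUN=1 run_mbw_node 0 2` only prints the two commands. Unlike taskset, `--membind` also keeps the allocation local, which is what the comment argues avoids QPI traffic.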

$ ./run_mbw.sh 4 5 1
4
5

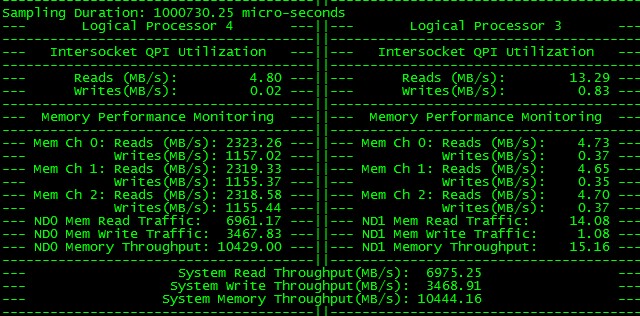

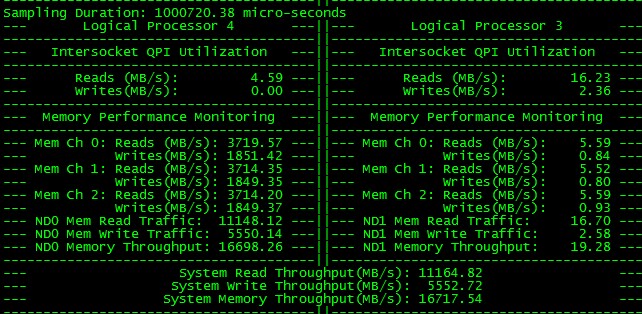

Memory bandwidth at this point:

The figure shows the 2 mbw instances pinned to CPU 0 consuming 10.4 GB/s of bandwidth, with nothing on CPU 1 and no QPI traffic, as expected.

Let's increase the load:

$ ./run_mbw.sh 6 7 1
6
7

$ ./run_mbw.sh 12 15 1
12
13
14
15

The number of threads went up but memory bandwidth stayed flat, which means we have hit the bottleneck: about 16 GB/s for this CPU.

Now let's try the other CPU, CPU 1:

$ ./run_mbw.sh 0 3 1
0
1
2
3
$ ./run_mbw.sh 8 11 1
8
9
10
11

Apply all the load at once and look at the screenshot:

The figure shows CPU 0 and CPU 1 each consuming about 15 GB/s, for a total of 30 GB/s.

By now it should be clear how to calculate our servers' memory bandwidth and how to measure current bandwidth usage. Interesting, isn't it?

Many thanks to the Internet for letting us solve problems this quickly.

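BTW: the measured bandwidth is only half the theoretical value. Did I get the calculation wrong somewhere? I'd be grateful if someone could explain. Thanks!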

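Postscript: on the Huawei machine the memory runs at 1066 MHz, so the theoretical bandwidth per channel is 1066 MT/s × 8 bytes ≈ 8.53 GB/s, or about 25.6 GB/s per CPU. The screenshot shows CPU 0 reaching 17.17 GB/s, about 67% of the theoretical peak. That indirectly confirms the DDR bandwidth formula is correct. Thanks again to @王王争.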
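The percentage in the postscript can be reproduced with a short awk calculation. This is a sketch that assumes the measured figure is 17.17 GB/s = 17170 MB/s and uses the same 8-bytes-per-transfer formula for the peak. `pct_of_peak` is a hypothetical name:

```bash
#!/bin/bash
# Measured bandwidth as a percentage of one socket's theoretical DDR3 peak.
# Usage: pct_of_peak MEASURED_MBPS MT_PER_S CHANNELS
pct_of_peak() {
    local peak
    peak=$(( $2 * 8 * $3 ))   # MB/s: transfers/s x 8 bytes x channels
    awk -v m="$1" -v p="$peak" 'BEGIN { printf "%.1f\n", 100 * m / p }'
}
```

`pct_of_peak 17170 1066 3` prints 67.1, in line with the roughly 67% quoted above.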

Have fun!


Great article, very in-depth!

Yu Feng Reply:

September 12th, 2011 at 9:50 pm

Thanks for the encouragement, 度哥!

Nice, I'll read it carefully.

On the X5500, with two DIMMs on each of the three channels, memory runs at 1066. Not sure about the X5600.

Excellent analysis, reposting this!

Yu Feng Reply:

September 13th, 2011 at 9:27 am

Thanks for the support.

Very in-depth, and very helpful.

Learned a lot. There's much I don't understand yet; I'll digest it slowly.

How should I understand this sentence in the first paragraph?

"On Linux, top shows memory usage, iostat shows I/O device usage, but there is no tool for measuring memory usage."

Yu Feng Reply:

September 13th, 2011 at 11:05 am

Typo: it should say CPU. Fixed, thanks for pointing it out!

Are these figures from the actual experiments? Why does a Red Hat release use APT? Red Hat does have an apt tool, but that's probably not what you used?

Yu Feng Reply:

September 13th, 2011 at 9:39 pm

"apt-get install mbw" was only meant to tell you that the package is called mbw.

On RHEL 5U4, of course, I installed it like this:

ubuntu:

apt-get source mbw

scp -r mbw* ip:/tmp

5U4:

cd mbw??

make

Thanks!

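In your case, I think the way the DIMMs are populated is what keeps you from reaching the theoretical value. I'll explain by email.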

Yu Feng Reply:

September 15th, 2011 at 6:19 pm

A very professional answer, thanks!

liuli Reply:

August 13th, 2013 at 3:52 pm

Could anyone tell me how the DIMMs should be populated to reach the theoretical value? Thanks for your help~

Yu Feng Reply:

August 18th, 2013 at 1:05 pm

Just ask the vendor.

liuli Reply:

August 26th, 2013 at 8:33 am

Could you send me a copy of that Intel PTU? It can no longer be downloaded from Intel's website~ Thanks~

Yu Feng Reply:

August 27th, 2013 at 4:07 pm

Mine seems to be gone too.

First visit here; reading 余锋's work with great respect.

Yu Feng Reply:

September 15th, 2011 at 6:18 pm

You're too kind; I have a lot to learn from you!

The Huawei server uses the Xeon E5620 CPU, which supports DDR3 memory only up to 1066 MHz.

http://ark.intel.com/products/47925/Intel-Xeon-Processor-E5620-%2812M-Cache-2_40-GHz-5_86-GTs-Intel-QPI%29#infosectionmemoryspecifications

The DELL R710 uses the Xeon E5670 CPU, which supports 1333 MHz DDR3 memory.

Yu Feng Reply:

October 14th, 2011 at 11:05 pm

Thanks for the valuable information!

Hi, about "At this point all CPUs are fully loaded. So what about memory bandwidth? See the figure:"

What tool did you use to view that CPU display?

Yu Feng Reply:

December 14th, 2011 at 2:51 pm

nmon, made by Intel. Just apt-get -y install nmon!

kelly Reply:

January 26th, 2016 at 11:22 am

nmon is IBM's ^_^

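I've only figured out why the R710 gets just 31 GB/s: it depends on where the DIMMs physically sit. Each physical CPU has 3 channels (horizontal, for bandwidth scaling), and each channel can take up to 3 DIMMs in depth (for capacity scaling). Take your 12 DIMMs. If the R710 had only 6 DIMMs, one in each of the 6 channels, average bandwidth should reach about 35 GB/s. But once a second DIMM is added behind each channel, memory runs only at DDR3 1066, even though no traffic crosses CPUs over QPI. Exactly why that happens, I don't know.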

I used a Xeon 5520 with DDR3 1066 and 6 DIMMs, one in each of the 6 channels (1×6 channels); at full DDR3 1066 speed the average bandwidth was 31 GB/s.

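Then I ran separate tests and, like the author, got only half the theoretical bandwidth. A dual-socket machine still can't reveal the problem; I think testing on a 4-socket machine would make things clear.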

Fully populated, 3×2 channels: 12936.4 MB/s

1×2 channels: 8179.31 MB/s

Fully populated, 3×6 channels: 26329.5 MB/s

1×6 channels: 31087.9 MB/s

With a Xeon 5650 (blade) using only 6 DIMMs, one in each of the 6 channels, the average was about 35 GB/s.

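But that machine had 2 extra DIMMs, so only 2 channels had an extra DIMM behind them. This uneven population seems to hurt even more: the average dropped to 28 GB/s.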

Reference:

http://i.dell.com/sites/content/business/solutions/whitepapers/en/Documents/poweredge-server-11gen-whitepaper-en.pdf

Yu Feng Reply:

December 17th, 2011 at 2:43 am

Great research spirit~

I pasted the wrong link above; this is the one I meant:

http://www.dell.com/downloads/global/products/pedge/en/server-poweredge-r710-tech-guidebook.pdf

I recently read some more NUMA documentation and remembered this question.

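When lz (the original poster) ran the two-node test, some QPI traffic appeared; as I understand it, ideally there should be no QPI traffic at all.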

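If Node0 accesses Node1's memory, that adds overhead: CPU cycles are spent on cross-node addressing, latency goes up and bandwidth goes down. It would be better to use numactl instead of taskset and test node0 or node1 on its own; the results would be cleaner.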

Yu Feng Reply:

April 20th, 2013 at 8:52 pm

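This probably has nothing to do with the lz4 library; rather, the user should bind the process to a CPU so the OS scheduler doesn't move it to another core and cause QPI traffic.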

Yu Feng,

Hi, could you send me a copy of Intel's PTU? That version can't be found anymore. My email is vstwins@21cn.com. Many thanks!

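To the author: when calculating memory bandwidth, should the memory frequency be the actual operating frequency on the machine, or the memory's rated frequency (the one printed on the factory label)?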

The theoretical bandwidths calculated from these two differ. My board always limits memory to a frequency below the rated one~

So I care quite a bit about which frequency to use~

Thanks in advance for replying~

@@王王争 淘宝长仁

I found and downloaded it through a Google search. QQ: 675881684