Bottlenecks in Network-Intensive Erlang Servers and How to Approach Them

Original article. If you repost it, please credit: reposted from 系统技术非业余研究


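Recently we have been doing fine-grained performance tuning of our IO-intensive Erlang server, with the goal of raising throughput from 400,000 packets per second to 600,000. This requires a solid understanding of how the process and IO schedulers work, plus fine-tuning of their behavior, so I spent a good deal of time reading the relevant documents and code.

The two most valuable articles were:

1. Characterizing the Scalability of Erlang VM on Many-core Processors (see here)

2. Evaluate the benefits of SMP support for IO-intensive Erlang applications (see here)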

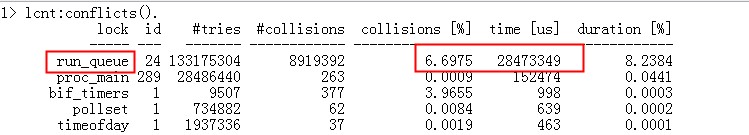

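According to lcnt, our current performance bottlenecks are:

2. Erlang has only a single poll set, so heavy IO turns it into a bottleneck. The conclusion on p. 46 of “Evaluate the benefits of SMP support for IO-intensive Erlang applications” reads: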

Finally, we analyzed how IO operations are handled by the Erlang VM, and that was the bottleneck. The problem relies on the fact that there is only one global poll-set where IO tasks are kept. Hence, only one scheduler at a time can call erts_check_io (the responsible function for performing IO tasks) to obtain pending tasks from the poll-set. So, a scheduler can finish its job, but it has to wait idly for the other schedulers to complete their IO pending tasks before it starts it own ones. In more details, for N scheduler, only one can call erts_check_io regardless the load; the other N-1 schedulers will start spinning until they gain access to erts_check_io() and finish executing their IO tasks. For a bigger number of schedulers, more schedulers will spin, and more CPU time will be waisted on spinning. This behavior was noticed even during our evaluations when running the tests in a 8 cores machine, apart from the 16 cores one. There are two conditions that determine whether a scheduler can access erts_check_io, one is for a mutex variable named “doing_sys_schedule” to be unlocked, and the other one is to make sure the variable “erts_port_task_outstanding_io_tasks” reaches a value of 0 meaning that there is no IO task to process in the whole system. If one of the conditions breaks and there is no other task to process, the scheduler starts spinning. Emysql driver generates a lot of processes to be executed by the schedulers since a new process is spawned per each single insert or read request. By using Erlang etop tool, we can check the lifetime of all the processes created in the Erlang VM. Their lifetime is extremely short, and they do nothing else (CPU-related) apart from the IO requests. The requests are serialized at this point because whenever a scheduler starts doing the system scheduling, it locks the mutex variable “doing_sys_schedule”, and then calls erts_check_io() function for finding pending IO tasks. These tasks are spread to the other schedulers and are processed only when the two aforementioned conditions are fulfilled.

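Before this, we submitted a related patch to the erlang/otp team, but it was not accepted for merging; see the discussion here. The multiple poll set feature that the team promised has still not appeared after several years.

The migration logic of the IO scheduler is covered in the first paper; a brief description can be found here: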

The migration logic does the following:

Collect statistics about the maximum length of every scheduler's run queue

Set up migration paths

Take jobs away from fully loaded schedulers and push them onto the queues of lightly loaded schedulers

Running on full load or not! If no scheduler is fully loaded, jobs are migrated to schedulers with lower ids, which leaves some schedulers inactive.

Given all this, we had to solve the problem ourselves. Erlang provides statistics that help locate it; here is a demonstration:

    $ erl
    Erlang R17A (erts-5.11) [source-e917f6d] [64-bit] [smp:16:16] [async-threads:10] [hipe] [kernel-poll:false] [type-assertions] [debug-compiled] [lock-checking] [systemtap]

    Eshell V5.11 (abort with ^G)
    1> erlang:system_flag(scheduling_statistics,enable).
    true
    2> erlang:system_info(scheduling_statistics).
    [{process_max,0,0},
     {process_high,0,0},
     {process_normal,6234777,0},
     {process_low,0,0},
     {port,6234700,0}]
    3> erlang:system_info(total_scheduling_statistics).
    [{process_max,0,0},
     {process_high,0,0},
     {process_normal,7063305,0},
     {process_low,0,0},
     {port,7062620,0}]

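Note the port entry above: it is the total number of port operations scheduled on the run queues. Ports are also balanced back and forth between schedulers.

Checking against the implementation in erl_process.c tells us what the numbers mean: the first is the number of processes the schedulers executed, and the second is the number of processes that were migrated.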

    for (i = 0; i < ERTS_NO_PRIO_LEVELS; i++) {
        prio[i] = erts_sched_stat.prio[i].name;
        executed[i] = erts_sched_stat.prio[i].total_executed;
        migrated[i] = erts_sched_stat.prio[i].total_migrated;
    }
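To make the two counters easier to read, here is a minimal sketch that turns the `{Name, Executed, Migrated}` tuples into a migration ratio per priority level. The module name `sched_stats` and its function names are hypothetical, and the sketch assumes `erlang:system_info(total_scheduling_statistics)` returns the same list shape as in the shell session above.

```erlang
-module(sched_stats).
-export([migration_ratio/1, snapshot/0]).

%% Stats is a list of {Name, Executed, Migrated} tuples, the shape printed
%% by erlang:system_info(total_scheduling_statistics) in the session above.
%% Returns {Name, Executed, Migrated, Ratio} with Ratio = Migrated / Executed.
migration_ratio(Stats) ->
    [{Name, Executed, Migrated, ratio(Migrated, Executed)}
     || {Name, Executed, Migrated} <- Stats].

%% Avoid dividing by zero for priority levels that never ran.
ratio(_Migrated, 0) -> 0.0;
ratio(Migrated, Executed) -> Migrated / Executed.

%% Enable the statistics, then report the ratios for the running node.
%% Assumption: the counters behave as shown in the session above.
snapshot() ->
    erlang:system_flag(scheduling_statistics, enable),
    migration_ratio(erlang:system_info(total_scheduling_statistics)).
```

Calling `sched_stats:snapshot()` twice, some seconds apart under load, gives a rough idea of whether the migrated share is growing. What counts as too high is a judgment call for the workload in question.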

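Migrating processes in particular causes a large number of lock operations on the source and target run queues. If you observe too many migrated processes, consider the undocumented process binding option {scheduler, N}, which pins a process to a given scheduler when it is created and so avoids migration. For the concrete steps, see this post.

Have fun!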
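As an illustration of the binding option, here is a minimal sketch that spawns a worker with `{scheduler, N}` and lets the caller ask which scheduler it is running on. The module `bound_worker` and its message protocol are hypothetical. Since `{scheduler, N}` is undocumented, its behavior may differ between OTP releases, so treat this as an experiment and not as a supported API.

```erlang
-module(bound_worker).
-export([start/1, scheduler_of/1]).

%% Spawn a worker pinned to scheduler N via the undocumented
%% {scheduler, N} spawn option. N must name an online scheduler.
start(N) when is_integer(N), N >= 1 ->
    case N =< erlang:system_info(schedulers_online) of
        true  -> spawn_opt(fun loop/0, [{scheduler, N}]);
        false -> erlang:error(badarg, [N])
    end.

%% Ask the worker which scheduler is currently executing it.
scheduler_of(Pid) ->
    Ref = make_ref(),
    Pid ! {which_scheduler, self(), Ref},
    receive
        {Ref, Id} -> Id
    after 1000 ->
        timeout
    end.

loop() ->
    receive
        {which_scheduler, From, Ref} ->
            %% erlang:system_info(scheduler_id) reports the scheduler
            %% that runs the calling process.
            From ! {Ref, erlang:system_info(scheduler_id)},
            loop();
        stop ->
            ok
    end.
```

For example, `Pid = bound_worker:start(2), bound_worker:scheduler_of(Pid).` should report 2 if the binding is honored. Comparing the migrated counters before and after pinning the busiest processes is one way to check whether the change helps.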


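Is the approach to have the system collect the statistics automatically and then set process spawn parameters dynamically based on thresholds? Or do you analyze the statistics offline?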

Yu Feng Reply:

November 12th, 2013 at 9:44 pm

Analyze the program's behavior carefully, then pin each process to a specific scheduler so that tasks never need to be pulled or pushed.